The Data Science Lab

Sentiment Analysis Using a PyTorch EmbeddingBag Layer

Dr. James McCaffrey of Microsoft Research uses a full movie review example to explain the natural language processing (NLP) problem of sentiment analysis, used to predict whether some text is positive (class 1) or negative (class 0).

Natural language processing (NLP) problems are very challenging. A common type of NLP problem is sentiment analysis. The goal of sentiment analysis is to predict whether some text is positive (class 1) or negative (class 0). For example, a movie review of, "This was the worst film I've seen in years" would certainly be classified as negative.

In situations where the text to analyze is long -- say several sentences with a total of 40 words or more -- two popular approaches for sentiment analysis are to use an LSTM (long short-term memory) network or a Transformer architecture network. These two approaches are difficult to implement. For situations where the text to analyze is short, the PyTorch code library has a relatively simple EmbeddingBag class that can be used to create an effective NLP prediction model.

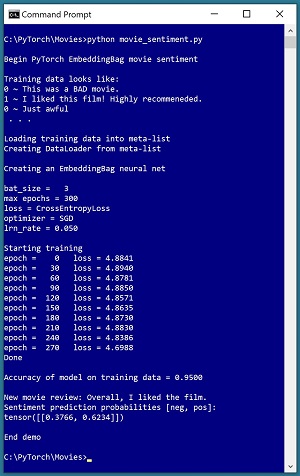

A good way to see where this article is headed is to take a look at the screenshot of a demo program in Figure 1. The goal of the demo is to predict the sentiment of a movie review. The demo training data consists of a text file of 20 very short movie reviews. The data looks like:

0 ~ This was a BAD movie.

1 ~ I liked this film! Highly recommeneded.

0 ~ Just awful

. . .

Notice that the word "recommeneded" is misspelled. Dealing with misspellings is one of dozens of issues that make NLP problems difficult. The demo program loads the training data into a meta-list using a specific format that is required by the EmbeddingBag class. The meta-list of training data is passed to a PyTorch DataLoader object which serves up training data in batches. Behind the scenes, the DataLoader uses a program-defined collate_data() function, which is a key component of the system.

Figure 1: Movie Review Sentiment Analysis Using an EmbeddingBag

The demo program uses a neural network architecture that has an EmbeddingBag layer, which is explained shortly. The neural network model is trained using batches of three reviews at a time. After training, the model is evaluated and has 0.95 accuracy on the training data (19 of 20 reviews correctly predicted). In a non-demo scenario, you would also evaluate the model accuracy on a set of held-out test data to see how well the model performs on previously unseen reviews.

The demo program concludes by predicting the sentiment for a new review of, "Overall, I liked the film." The prediction is in the form of two pseudo-probabilities with values [0.3766, 0.6234]. The first value at index [0] is the pseudo-probability of class negative, and the second value at [1] is the pseudo-probability of class positive. Therefore, the prediction is that the review is positive.
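To make the last two paragraphs concrete, here is a minimal sketch in plain Python (no PyTorch). The function names are illustrative assumptions and are not taken from the demo program. It shows how a pair of pseudo-probabilities maps to a predicted class, and how the 0.95 accuracy figure follows from 19 correct predictions out of 20.

```python
# Illustrative helpers; the names are hypothetical, not from the demo program.

def predicted_class(pseudo_probs):
    # index of the largest pseudo-probability: 0 = negative, 1 = positive
    return max(range(len(pseudo_probs)), key=lambda i: pseudo_probs[i])

def accuracy(num_correct, num_total):
    # fraction of items that were predicted correctly
    return num_correct / num_total

probs = [0.3766, 0.6234]  # values reported by the demo run
label = predicted_class(probs)
print("positive" if label == 1 else "negative")  # positive
print(accuracy(19, 20))  # 0.95
```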

This article assumes you have an intermediate or better familiarity with a C-family programming language, preferably Python, and a basic familiarity with the PyTorch code library. The complete source code for the demo program is presented in this article and is also available in the accompanying file download. The training data is embedded as comments at the bottom of the program source file. All normal error checking has been removed to keep the main ideas as clear as possible.

To run the demo program, you must have Python, PyTorch and TorchText installed on your machine. The demo program was developed on Windows 10 using the Anaconda 2020.02 64-bit distribution (which contains Python 3.7.6) and PyTorch version 1.8.0 for CPU installed via pip. Installation is not trivial. You can find detailed step-by-step instructions for installing Python and PyTorch in my blog post.

The TorchText library contains hundreds of useful classes and functions for dealing with natural language problems. The demo program uses TorchText version 0.9 which has many major changes from versions 0.8 and earlier. After installing Python and PyTorch you can install TorchText 0.9 by going to the web site and then downloading the appropriate whl file for your system, for example, torchtext-0.9.0-cp37-cp37m-win_amd64.whl for Python 3.7 running on Windows. After you download the whl file, you can install TorchText by opening a shell, navigating to the directory containing the whl file, and issuing the command "pip install (whl file)."

Note that this article is significantly longer than any other article in the Visual Studio Magazine Data Science Lab series. I originally split this article into three smaller articles but because NLP problems are very complex and their components (tokenizers, vocabulary objects, collating functions and so on) are tightly coupled, a series of articles made the topic more difficult, rather than easier, to understand. The moral of the story is that if you are not familiar with NLP, be aware that NLP systems are usually much more complicated than tabular data or image processing problems.

The Movie Review Data

The movie review data used by the demo program is artificial. There are just 20 items. In a non-demo scenario, you want at least several hundred training items and preferably many thousands. The 20-item training data is:

0 ~ This was a BAD movie.

1 ~ I liked this film! Highly recommeneded.

0 ~ Just awful

1 ~ Good film, acting

0 ~ Don't waste your time - A real dud

0 ~ Terrible

1 ~ Great movie.

0 ~ This was a waste of talent.

1 ~ I liked this movie a lot. See it.

1 ~ Best film I've seen in years.

0 ~ Bad acting and a weak script.

1 ~ I recommend this film to everyone

1 ~ Entertaining and fun.

0 ~ I didn't like this movie at all.

1 ~ A good old fashioned story

0 ~ The story made no sense to me.

0 ~ Amateurish from start to finish.

1 ~ I really liked this move. Lot of fun.

0 ~ I disliked this movie and walked out.

1 ~ A thrilling adventure for all ages.

The data separates the item 0-1 label from the item text using a "~" character because a "~" is less likely to occur in a movie review than other separators such as a comma or a tab.
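As a small illustration of this file format, the sketch below splits one line into its integer label and its review text. The function name parse_review_line() is a hypothetical helper, not part of the demo program. Splitting only on the first "~" is an assumption made here so that a stray "~" inside a review would not be lost.

```python
# Hypothetical helper for the "label ~ text" format; not from the demo program.

def parse_review_line(line):
    # split on the first "~" only, so a "~" inside the review text survives
    label_str, text = line.strip().split("~", 1)
    return int(label_str), text.strip()

lines = ["0 ~ This was a BAD movie.", "1 ~ Great movie."]
for ln in lines:
    print(parse_review_line(ln))
# (0, 'This was a BAD movie.')
# (1, 'Great movie.')
```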

In order to create an NLP sentiment analysis prediction model using a neural network with an EmbeddingBag layer, you must understand:

- How to create and use a tokenizer object

- How to create and use a Vocab object

- How to create an EmbeddingBag layer and integrate it into a neural network

- How to design a custom collating function for use by a PyTorch DataLoader object

- How to design a neural network that uses all these components

- How to train the network

- How to evaluate the prediction accuracy of the trained model

- How to use the trained model to make a prediction for a new, previously unseen movie review

- How to integrate all the pieces into a complete working program

All of this sounds a bit more intimidating than it really is. The entire demo program is just over 200 lines of code. If you methodically examine each of the nine steps as presented in this article, you will have all the knowledge you need to create a custom sentiment analysis system for short-input text.

The complete source code is presented in Listing 8 at the end of this article. If you learn like I do, a good strategy for understanding this article is to begin by getting the complete demo program up and running.

1. Understanding Tokenizers

Loosely speaking, a tokenizer is a function that breaks a sentence down to a list of words. In addition, tokenizers usually normalize words by converting them to lower case. Put another way, a tokenizer is a function that normalizes a sequence of tokens, replaces or modifies specified tokens, splits the tokens, and stores them in a list.

Suppose you have a movie review like:

This movie was BAD with a capital B! <br /> Don't waste your time.

The "basic_english" tokenizer which is used in the demo program would return a list with 16 tokens:

[this movie was bad with a capital b ! don ' t waste your time .]

Words have been converted to lower case, consecutive whitespace characters such as spaces have been reduced to a single space, and the HTML <br /> tag has been removed (actually converted to a single blank space character). Notice that the exclamation point, single-quote and period characters are considered individual tokens.

When working with NLP, you can either use a library tokenizer or implement a custom tokenizer from scratch. The demo program uses a tokenizer from the TorchText library. The statements that instantiate a tokenizer object are:

import torchtext as tt

g_toker = tt.data.utils.get_tokenizer("basic_english")

The leading "g_" indicates that the tokenizer object has global scope. The reason why a global tokenizer is needed is explained later in this article. The tokenizer could be used like this:

review = "This movie was great!!"

tok_lst = g_toker(review) # list of words/tokens; the tokenizer is called like a function

The TorchText basic_english tokenizer works reasonably well for most simple NLP scenarios. Other common Python language tokenizers are in the spaCy library and the NLTK (natural language toolkit) library.

The basic_english tokenizer performs these five operations:

- convert input to all lower case

- add space before and after single-quote, period, comma, left paren, right paren, exclamation point, question mark

- replace colon, semicolon, <br /> with a space

- remove double-quote

- split on whitespace
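The five operations can be traced with a short standard-library sketch. This is an approximation written for illustration, grouped the same way as the list above. It is not the TorchText source code, and the name basic_english_like() is an assumption. Applied to the example review from earlier in this section, it produces the same 16 tokens.

```python
import re

def basic_english_like(text):
    # stdlib-only approximation of the five listed operations
    text = text.lower()                            # 1. lower case
    text = re.sub(r"(['.,()!?])", r" \1 ", text)   # 2. pad punctuation with spaces
    text = re.sub(r"<br />|[:;]", " ", text)       # 3. replace with a space
    text = text.replace('"', "")                   # 4. remove double-quotes
    return text.split()                            # 5. split on whitespace

review = "This movie was BAD with a capital B! <br /> Don't waste your time."
tokens = basic_english_like(review)
print(tokens)
print(len(tokens))  # 16
```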

In some problem scenarios you may want to create a custom tokenizer from scratch. For example, in several of my NLP projects I wanted to retain the word "don't" rather than split it into three separate tokens. One approach to create a custom tokenizer is to refactor the TorchText basic_english tokenizer source code. An example is presented in Listing 1. The MyTokenizer class constructs a regular expression and the tokenize() method applies the regular expression to its input text.

Listing 1: A Tokenizer Class Based on TorchText basic_english Tokenizer

import re  # regular expression module

class MyTokenizer():
    def __init__(self):
        # patterns to search for, applied in order
        self.patts = [r'\'', r'\"', r'\.', r'<br \/>', r',',
                      r'\(', r'\)', r'\!', r'\?', r'\;',
                      r'\:', r'\s+']
        # replacement string for the pattern at the same position
        self.replaces = [' \' ', '', ' . ', ' ', ' , ',
                         ' ( ', ' ) ', ' ! ', ' ? ', ' ',
                         ' ', ' ']
        # list of (compiled pattern, replacement) pairs
        self.engine = [(re.compile(p), r)
                       for p, r in zip(self.patts, self.replaces)]

    def tokenize(self, line):
        line = line.lower()
        for pattern_re, replaced_str in self.engine:
            line = pattern_re.sub(replaced_str, line)
        return line.split()

Another approach for creating a custom tokenizer is to use simple string operations instead of a regular expression. For example, you could write a standalone function like:

def tokenize(line):
    line = line.lower()
    line = line.replace(".", " . ")
    line = line.replace("<br />", " ")
    line = line.replace('\"', "")
    # etc.
    return line.split()

The key takeaway is that tokenizers are not trivial, but they're not rocket surgery either. You can implement a custom tokenizer if necessary for your problem scenario, or if you want to avoid an external dependency.
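As one illustration of the custom-tokenizer idea, the sketch below keeps contractions such as "don't" as a single token by leaving the single-quote character alone. The function name and the exact set of padded characters are assumptions chosen for this example. This is one possible design, not the approach used in the demo program.

```python
import re

# Hypothetical variant: pads punctuation except the single-quote,
# so contractions such as "don't" stay intact.
_PAD = re.compile(r"([.,()!?])")
_TO_SPACE = re.compile(r"<br />|[:;]")

def tokenize_keep_contractions(line):
    line = line.lower()
    line = _TO_SPACE.sub(" ", line)   # <br />, colon, semicolon become a space
    line = _PAD.sub(r" \1 ", line)    # surround punctuation with spaces
    line = line.replace('"', "")      # remove double-quotes
    return line.split()

print(tokenize_keep_contractions("Don't waste your time."))
# ["don't", 'waste', 'your', 'time', '.']
```

A trade-off of this design is that single quotes used as quotation marks stay attached to the neighboring word, so whether it helps depends on the text you expect to see.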

2. Understanding Vocabulary Objects

An NLP vocabulary object accepts a token/word and returns a unique integer ID. An example of creating and using a global-scope TorchText Vocab object is:

g_vocab = make_vocab(".\\Data\\reviews20.txt") # uses g_toker

id = g_vocab["movie"]

print(id) # result is 6

token = g_vocab.itos[9]

print(token) # result is "and"

To create a TorchText Vocab object you must write a program-defined function such as make_vocab() that analyzes source text (sometimes called a corpus). The program-defined function uses a tokenizer to break the source text into tokens and then constructs a Vocab object. The Vocab object has a member List object, itos[] ("integer to string") and a member Dictionary object stoi[] ("string to integer").

Weirdly, when you want to convert a string to an ID during model training, you do not access the stoi[] Dictionary directly, such as id = g_vocab.stoi["movie"]. Instead you index the Vocab object itself, like id = g_vocab["movie"]. Behind the scenes, the indexing operation is routed to the class __getitem__() method, which in turn calls the get() method of the stoi[] Dictionary. That call has a default return value of 0 for words like "floogebargen" that aren't in the Dictionary and would otherwise throw a dictionary key-not-found error. If you're new to Python programming, magic method calls like this can take a while to get used to.

When you want to convert an integer ID to a string, typically during debugging or system diagnostics, you must explicitly call the itos[] List, such as str = g_vocab.itos[27].
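The relationship between itos[], stoi[] and the indexing operation can be sketched with a small standard-library stand-in. The TinyVocab class below is hypothetical and heavily simplified. It is not the TorchText Vocab class, and the ordering rule (special tokens first, then tokens by descending count with ties broken alphabetically) is an assumption chosen for the illustration.

```python
import collections

class TinyVocab:
    # Simplified, hypothetical stand-in for a vocabulary object;
    # this is not the TorchText Vocab class.
    def __init__(self, counter, specials=("<unk>", "<pad>")):
        # specials first, then tokens by descending count (ties alphabetical)
        ordered = sorted(counter.items(), key=lambda kv: (-kv[1], kv[0]))
        self.itos = list(specials) + [tok for tok, _ in ordered]
        self.stoi = {tok: i for i, tok in enumerate(self.itos)}

    def __getitem__(self, token):
        # unknown tokens map to the ID of "<unk>", which is 0 here
        return self.stoi.get(token, self.stoi["<unk>"])

counter = collections.Counter("i liked this movie a lot . see it .".split())
vocab = TinyVocab(counter)
print(vocab["."])                  # 2 (most frequent token, right after the specials)
print(vocab["floogebargen"])       # 0 (not in the vocabulary)
print(vocab.itos[vocab["movie"]])  # movie
```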

The demo program defines a make_vocab() function as shown in Listing 2.

Listing 2: The Demo Program make_vocab() Function

import torchtext as tt
import collections

def make_vocab(fn):
    # create Vocab object to convert words/tokens to IDs
    # assumes an instantiated global tokenizer exists
    counter_obj = collections.Counter()
    f = open(fn, "r")
    for line in f:
        line = line.strip()
        txt = line.split("~")[1]
        split_and_lowered = g_toker(txt)  # global
        counter_obj.update(split_and_lowered)
    f.close()
    result = tt.vocab.Vocab(counter_obj, min_freq=1,
        specials=('<unk>', '<pad>'))
    return result  # a Vocab object