The Data Science Lab

Regression Using LightGBM

Dr. James McCaffrey of Microsoft Research presents a full-code, step-by-step tutorial on this powerful machine learning technique used to predict a single numeric value.

A regression problem is one where the goal is to predict a single numeric value. For example, you might want to predict a person's annual income from their sex, age, state of residence and political leaning. There are many machine learning techniques for regression. One of the most powerful techniques is to use the LightGBM (light gradient boosting machine) system.

LightGBM is a sophisticated, open-source, tree-based system that was introduced in 2017. LightGBM can perform multi-class classification (predict one of three or more possible values), binary classification (predict one of two possible values), regression and ranking.

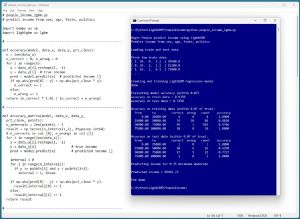

Figure 1: Regression Using LightGBM in Action

The best way to see where this article is headed is to take a look at the screenshot of a demo program in Figure 1. LightGBM has three programming language interfaces -- C, Python and R. The demo program uses the Python language API. The demo begins by loading the data to analyze into memory. The data looks like:

[ 1. 24. 0. 2.] | 29500.0

[ 0. 39. 2. 1.] | 51200.0

[ 1. 63. 1. 0.] | 75800.0

. . .

There are 200 items in the training dataset and 40 items in a test dataset. Each line represents a person. The predictor variables are sex, age, state and political leaning.

The demo creates and trains a LightGBM regression model. The trained model predicts the training data with 93.5 percent accuracy (187 out of 200 correct) and the test data with 72.5 percent accuracy (29 out of 40 correct). The demo defines a correct income prediction as one that's within 7 percent of the true income.

The demo concludes by predicting the income for a new, previously unseen person who is male, age 35, from Oklahoma, and a political moderate. The predicted income is $49,466.72.
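The within-7-percent accuracy criterion described above can be sketched in a few lines of plain Python. This is only an illustration of the idea: the function names is_close() and pct_accuracy() are hypothetical, and the predicted values are made up for the example rather than taken from the demo run.

```python
def is_close(pred, actual, pct_close):
    """True when pred is within pct_close (a fraction) of actual."""
    return abs(pred - actual) < abs(pct_close * actual)

def pct_accuracy(preds, actuals, pct_close):
    """Fraction of predictions that are within pct_close of the true value."""
    n_correct = sum(is_close(p, a, pct_close) for p, a in zip(preds, actuals))
    return n_correct / len(actuals)

# true incomes from the sample data; predictions are invented for illustration
actuals = [29500.0, 51200.0, 75800.0, 44500.0]
preds = [30000.0, 47000.0, 80000.0, 44000.0]

# 51200 * 0.07 = 3584, so a miss of 4200 counts as wrong; the other three are close
print(pct_accuracy(preds, actuals, 0.07))  # 0.75
```

A tighter or looser threshold than 0.07 changes the reported accuracy, so the percentage should always be stated alongside the accuracy value.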

This article assumes you have intermediate or better programming skill with a C-family language and a basic knowledge of decision tree terminology, but does not assume you know anything about LightGBM. The entire source code for the demo program is presented in this article, and is also available in the accompanying file download. You can also find the source code and data online.

The Data

The demo program uses a 240-item set of synthetic data. The raw data looks like:

F 24 michigan 29500.00 liberal

M 39 oklahoma 51200.00 moderate

F 63 nebraska 75800.00 conservative

M 36 michigan 44500.00 moderate

F 27 nebraska 28600.00 liberal

. . .

The fields are sex (M, F), age, state (Michigan, Nebraska, Oklahoma), income and political leaning (conservative, moderate, liberal). When using LightGBM, it's best to encode categorical predictors and labels using zero-based ordinal encoding. Unlike most other machine learning regression systems, when using LightGBM, numeric predictor and target variables can be used as-is. You can normalize numeric predictors using min-max, z-score, or divide-by-constant normalization, but normalization does not help LightGBM regression models because decision tree splits depend on the ordering of predictor values rather than on their scale.

You can encode your data in a preprocessing step, or you can encode programmatically while the data is being loaded into memory. The demo uses preprocessing. The comma-delimited encoded data looks like:

1, 24, 0, 29500.00, 2

0, 39, 2, 51200.00, 1

1, 63, 1, 75800.00, 0

0, 36, 0, 44500.00, 1

1, 27, 1, 28600.00, 2

. . .
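One possible way to do the preprocessing is sketched below. The three lookup tables are inferred from the raw and encoded sample lines shown in this article (for example, F maps to 1 and michigan maps to 0). The function name encode_line() is hypothetical and is not part of the demo program.

```python
# zero-based ordinal codes, inferred from the sample lines in the article
SEX = {"M": 0, "F": 1}
STATE = {"michigan": 0, "nebraska": 1, "oklahoma": 2}
POLITICS = {"conservative": 0, "moderate": 1, "liberal": 2}

def encode_line(raw_line):
    """Convert one whitespace-delimited raw line to a comma-delimited encoded line."""
    sex, age, state, income, politics = raw_line.split()
    return "%d, %d, %d, %0.2f, %d" % (
        SEX[sex], int(age), STATE[state], float(income), POLITICS[politics])

print(encode_line("F 24 michigan 29500.00 liberal"))   # 1, 24, 0, 29500.00, 2
print(encode_line("M 39 oklahoma 51200.00 moderate"))  # 0, 39, 2, 51200.00, 1
```

An unexpected category value raises a KeyError, which is usually preferable to silently writing a bad code into the data file.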

The 240-item encoded data was split into a 200-item set of training data to create a prediction model and a 40-item set of test data to evaluate the model.
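The article does not say how the 200-40 split was made. A minimal sketch of one common approach, a seeded random shuffle followed by a slice, is shown here. The function name split_lines() and the stand-in data are assumptions for illustration only.

```python
import random

def split_lines(lines, n_train, seed=0):
    """Shuffle a copy of lines, then return (train, test) lists."""
    rnd = random.Random(seed)   # local generator so the split is reproducible
    shuffled = list(lines)      # leave the caller's list untouched
    rnd.shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]

# stand-in for the 240 encoded data lines
lines = ["item %d" % i for i in range(240)]
train, test = split_lines(lines, 200)
print(len(train), len(test))  # 200 40
```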

Installing Python and LightGBM

To use the Python language API for LightGBM, you must have Python installed on your machine. I strongly recommend using the Anaconda distribution of Python. The Anaconda distribution contains a Python interpreter and roughly 500 Python packages that are (mostly) compatible with each other. The demo uses version Anaconda3-2023.09-0 which contains Python version 3.11.5. To install Anaconda on a Windows platform, go here and find installer file Anaconda3-2023.09-0-Windows-x86_64.exe (or newer). Note: it is very easy to accidentally download a version that's not compatible with your machine.

Click on the .exe file link to download it to your machine. After the file is on your machine, double-click on the file to start the GUI-based installation process. In most scenarios, you can accept all the default installation values, with one exception: by default the installer does not add Anaconda3 to your machine's PATH environment variable. I recommend enabling that option so that you don't have to manually edit your system environment variables or enter long paths on the command line.

You can find detailed step-by-step instructions for installing Anaconda Python here.

You can verify your Anaconda Python installation by opening a command shell and typing the command "python" (without quotes). You should see a reply message that indicates the version of Python, followed by the Python triple greater-than prompt. You can type "exit()" to quit the interpreter.

If you ever need to uninstall Anaconda on a Windows machine, you can do so by going to the Add or Remove Programs setting, and clicking on the Uninstall option.

At the time this article was written, the Anaconda distribution did not contain the LightGBM system, and so it must be installed separately. I strongly recommend using the pip installer program (which is included with Anaconda). To install the most recent version of LightGBM over the Internet, open a command shell and type the command "pip install lightgbm" (without the quotes). After a few seconds, you should see a message indicating success. To verify, open a command shell and type "python" to start the interpreter. At the Python prompt, type the command "import lightgbm as L" followed by the command "L.__version__" (with double underscores on each side). You should see the version of LightGBM that is installed.
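The same check can be scripted instead of typed at the interactive prompt. The sketch below uses only the Python standard library to look for a package and report its version. The helper name installed_version() is hypothetical, and the script prints None for LightGBM if the package is not installed.

```python
import importlib
import importlib.util
import sys

def installed_version(package_name):
    """Return the package's __version__ string, or None if it is not installed."""
    if importlib.util.find_spec(package_name) is None:
        return None
    module = importlib.import_module(package_name)
    return getattr(module, "__version__", "unknown")

print("Python   :", sys.version.split()[0])
print("LightGBM :", installed_version("lightgbm"))
```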

Instead of installing LightGBM over the Internet, you can first download the LightGBM package to your machine and then install. Go here and search for "lightgbm" (without the quotes). The search results will give you a link to a LightGBM package page. Click on the Download Files link. You will go to a page that has a .whl file named like lightgbm-4.3.0-py3-none-win_amd64.whl that you can click on to download the file to your machine. After the download completes, open a command shell, navigate to the directory containing the .whl file, and install LightGBM by typing the command "pip install" followed by the name of the .whl file.

I often use the local-install technique so that I can have a copy of LightGBM on my machine available even when I'm not connected to the Internet. If you ever need to uninstall LightGBM, you can do so by typing the command "pip uninstall lightgbm" (without the quotes).

The LightGBM Demo Program

The complete demo program is presented in Listing 1. The demo begins by loading the training data into memory:

import numpy as np
import lightgbm as lgbm

def main():
  np.random.seed(1)
  train_file = ".\\Data\\people_train.txt"
  test_file = ".\\Data\\people_test.txt"

  x_train = np.loadtxt(train_file, usecols=[0,1,2,4],
    delimiter=",", comments="#", dtype=np.float64)
  y_train = np.loadtxt(train_file, usecols=3,
    delimiter=",", comments="#", dtype=np.float64)
  . . .

The demo does not use the NumPy random number generator directly, but it's good practice to set the generator seed value anyway in case the program is modified to use the RNG.

The demo assumes that the training and test data files are located in a subdirectory named Data. The comma-delimited data is loaded into NumPy arrays using the loadtxt() function. The predictor values in columns 0, 1, 2, 4 are loaded as type float64 and the target income values are loaded from column 3. Lines that begin with "#" are comments and are not loaded.
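To see how the usecols and comments arguments behave without needing the data files, the sketch below feeds loadtxt() an in-memory string instead of a file path. The three data lines come from the sample shown earlier. The header comment line and the variable name sample are made up for illustration.

```python
import io
import numpy as np

# in-memory stand-in for a data file; the "#" line is a hypothetical header
sample = """# sex, age, State, income, politics
1, 24, 0, 29500.00, 2
0, 39, 2, 51200.00, 1
1, 63, 1, 75800.00, 0
"""

# predictors are columns 0, 1, 2, 4; the target income is column 3
x = np.loadtxt(io.StringIO(sample), usecols=[0,1,2,4],
  delimiter=",", comments="#", dtype=np.float64)
y = np.loadtxt(io.StringIO(sample), usecols=3,
  delimiter=",", comments="#", dtype=np.float64)

print(x.shape)  # (3, 4)
print(y.shape)  # (3,)
```

Reading the file twice, once for predictors and once for the target, is simple but slightly wasteful. An alternative is to load all five columns once and slice the resulting array.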

Listing 1: LightGBM Regression Demo Program

# people_income_lgbm.py
# predict income from sex, age, State, politics

import numpy as np
import lightgbm as lgbm

# -----------------------------------------------------------

def accuracy(model, data_x, data_y, pct_close):
  n = len(data_x)
  n_correct = 0; n_wrong = 0
  for i in range(n):
    x = data_x[i].reshape(1, -1)
    y = data_y[i]  # true income
    pred = model.predict(x)  # predicted income []
    if np.abs(pred[0] - y) < np.abs(pct_close * y):
      n_correct += 1
    else:
      n_wrong += 1
  return (n_correct * 1.0) / (n_correct + n_wrong)

# -----------------------------------------------------------

def accuracy_matrix(model, data_x, data_y,
  pct_close, points):
  n_intervals = len(points) - 1
  result = np.zeros((n_intervals,2), dtype=np.int64)
  # n_corrects in col [0], n_wrongs in col [1]
  for i in range(len(data_x)):
    x = data_x[i].reshape(1, -1)
    y = data_y[i]  # true income
    pred = model.predict(x)  # predicted income []

    # find the income interval that holds the true income
    interval = 0
    for j in range(n_intervals):
      if y >= points[j] and y < points[j+1]:
        interval = j; break

    if np.abs(pred[0] - y) < np.abs(pct_close * y):
      result[interval][0] += 1
    else:
      result[interval][1] += 1
  return result

# -----------------------------------------------------------

def show_acc_matrix(am, points):
  h = "from to correct wrong count accuracy"
  print(" " + h)
  for i in range(len(am)):
    print("%10.2f" % points[i], end="")
    print("%10.2f" % points[i+1], end="")
    print("%8d" % am[i][0], end ="")
    print("%8d" % am[i][1], end ="")
    count = am[i][0] + am[i][1]
    print("%8d" % count, end="")
    if count == 0:
      acc = 0.0
    else:
      acc = am[i][0] / count
    print("%12.4f" % acc)

# -----------------------------------------------------------

def main():
  # 0. get started
  print("\nBegin People predict income using LightGBM ")
  print("Predict income from sex, age, State, politics ")
  np.random.seed(1)

  # 1. load data
  # sex, age, State, income, politics
  #  0    1     2      3        4
  print("\nLoading train and test data ")
  train_file = ".\\Data\\people_train.txt"
  test_file = ".\\Data\\people_test.txt"

  x_train = np.loadtxt(train_file, usecols=[0,1,2,4],
    delimiter=",", comments="#", dtype=np.float64)
  y_train = np.loadtxt(train_file, usecols=3,
    delimiter=",", comments="#", dtype=np.float64)

  x_test = np.loadtxt(test_file, usecols=[0,1,2,4],
    delimiter=",", comments="#", dtype=np.float64)
  y_test = np.loadtxt(test_file, usecols=3,
    delimiter=",", comments="#", dtype=np.float64)

  np.set_printoptions(precision=0, suppress=True)
  print("\nFirst few train data: ")
  for i in range(3):
    print(x_train[i], end="")
    print(" | " + str(y_train[i]))
  print(". . . ")

  # 2. create and train model
  print("\nCreating and training LightGBM regression model ")
  params = {
    'objective': 'regression',  # not needed
    'boosting_type': 'gbdt',  # default
    'num_leaves': 31,  # default
    'learning_rate': 0.05,  # default = 0.10
    'feature_fraction': 1.0,  # default
    'min_data_in_leaf': 2,  # default = 20
    'random_state': 0,
    'verbosity': -1
  }
  model = lgbm.LGBMRegressor(**params)  # scikit API
  model.fit(x_train, y_train)
  print("Done ")

  # 3. evaluate model
  print("\nEvaluating model accuracy (within 0.07) ")
  acc_train = accuracy(model, x_train, y_train, 0.07)
  print("accuracy on train data = %0.4f " % acc_train)
  acc_test = accuracy(model, x_test, y_test, 0.07)
  print("accuracy on test data = %0.4f " % acc_test)

  inc_pts = \
    [0.00, 25000.00, 50000.00, 75000.00, 100000.00]
  am_train = \
    accuracy_matrix(model, x_train, y_train, 0.07, inc_pts)
  print("\nAccuracy on training data (within 0.07 of true):")
  show_acc_matrix(am_train, inc_pts)

  am_test = \
    accuracy_matrix(model, x_test, y_test, 0.07, inc_pts)
  print("\nAccuracy on test data (within 0.07 of true):")
  show_acc_matrix(am_test, inc_pts)

  # 4. use model
  print("\nPredicting income for M 35 Oklahoma moderate ")
  x = np.array([[0, 35, 2, 1]], dtype=np.float64)
  y_pred = model.predict(x)
  print("\nPredicted income = %0.2f " % y_pred[0])

  print("\nEnd demo ")

# -----------------------------------------------------------

if __name__ == "__main__":
  main()

The test data is loaded into memory as arrays x_test and y_test in the same way as the training data. Next, the demo displays the first three lines of the training data as a sanity check:

np.set_printoptions(precision=0, suppress=True)
print("First few train data: ")
for i in range(3):
  print(x_train[i], end="")
  print(" | " + str(y_train[i]))
print(". . . ")

In a non-demo scenario, you might want to display all the data.

Creating and Training the LightGBM Regression Model

The demo program creates and trains a LightGBM regression model using these statements:

# 2. create and train model
print("Creating and training LightGBM regression model ")
params = {
  'objective': 'regression',  # not needed
  'boosting_type': 'gbdt',  # default
  'num_leaves': 31,  # default
  'learning_rate': 0.05,  # default = 0.10
  'feature_fraction': 1.0,  # default
  'min_data_in_leaf': 2,  # default = 20
  'random_state': 0,
  'verbosity': -1
}
model = lgbm.LGBMRegressor(**params)
model.fit(x_train, y_train)

The regression object is named model and is instantiated by setting up its parameters as a Python Dictionary collection named params. The main challenge when using LightGBM is wading through the dozens of parameters. The LGBMRegressor class/object has 19 parameters (num_leaves, max_depth and so on) and behind the scenes there are 57 Learning Control Parameters (min_data_in_leaf, bagging_fraction and so on), for a total of 76 parameters to deal with.
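One way to get an overview of the constructor parameters is to inspect the class signature programmatically. The sketch below is a generic helper, with the hypothetical name constructor_defaults(), that lists the keyword parameters of any class constructor together with their default values. It is applied to LGBMRegressor only if LightGBM is installed. The exact parameter list depends on the installed LightGBM version, so no specific output is shown. As far as I can tell, learning control parameters such as min_data_in_leaf are not named constructor arguments but are accepted as extra keyword arguments, so they will not appear in this listing.

```python
import importlib.util
import inspect

def constructor_defaults(cls):
    """Map each keyword parameter of cls.__init__ to its default value."""
    sig = inspect.signature(cls.__init__)
    return {
        name: p.default
        for name, p in sig.parameters.items()
        if p.default is not inspect.Parameter.empty  # skips self and **kwargs
    }

# only query LightGBM when the package is actually available
if importlib.util.find_spec("lightgbm") is not None:
    import lightgbm as lgbm
    defaults = constructor_defaults(lgbm.LGBMRegressor)
    print("number of named constructor parameters =", len(defaults))
    for name, default in defaults.items():
        print("%-20s %r" % (name, default))
```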